XtremIO and VMware Path Failure Detection

In XtremIO XIOS version 4.0.10 we made an (optional) change to the SCSI error codes that the array returns to ESX hosts under certain circumstances. To understand the difference, we first need to understand a little about how VMware acts when a LUN disappears for some reason.

When VMware loses all paths to a LUN there’s 2 different states that it can put that device into – "All Paths Down" (APD) or "Permanent Device Loss" (PDL). Which of these states is chosen depends on how the LUN fails - whether it simply stops responding down all paths (in which case it'll always be APD), or if it starts sending certain SCSI error codes (in which case it can go into either APD or PDL, depending on the errors returned)

With APD, the VMware host will continue to try and access the device, including holding/retrying any outstanding IO for that device. If/when the device comes back, it will handle the device return relatively cleanly, and although a reboot of the guest OS may be required, there should be no need to reboot the VMware host. However during the period the device is unavailable, the VMware host may become unresponsive (although other guests should continue to run without issue). If the device never comes back, then you will likely require a reboot of the VMware server to recover.

With PDL, VMware basically writes off the device as gone for good. Any outstanding IO will be thrown away (and the guest OS will be told they failed). If/when the device comes back, VMware will generally not attempt to use it, and the only way to get it to recognize the LUN again is to reboot the VMware host. During the period the device is not available (and after it comes back) the VMware host itself will be completely healthy, and there will be no impact at all to the host or to any others guests running on it (right up until the point you need to reboot!)

Historically when an XtremIO array was shutdown for any reason (both user initiated, and where the array shuts down due to a loss of power/redundancy/etc) we sent the SCSI responses that caused VMware to put the affected devices into a PDL state. This worked well in that the ESXi server stays responsive and healthy, but it meant that once the array recovered (which could be as soon as a few minutes later) ALL VMware servers connected to it would need to be rebooted in order to re-gain access the these LUNs. This has caused some customers pain, especially where the array recovered quickly (such as after a short power drop).

In version 4.0.10 we’ve added the ability to change this behavior so that instead VMware will put these devices into an APD state. For now, the default behavior stays as before (PDL), but you can change it to APD using the modify-clusters-parameters command :

xmcli (admin)> modify-clusters-parameters esx-device-connectivity-mode="apd"

Modified Cluster Parameters

Not surprisingly, you can also check the current value for this option using show-clusters-parameters :

xmcli (admin)> show-clusters-parameters

Cluster-Name Index ODX-Mode iSCSI-TCP-Port Obfuscate-Debug-Info Debug-Info-Creation-Timeout Send-SNMP-Heartbeat ESX-Device-Connectivity-Mode

xtremio4 1 enabled 3260 disabled normal True apd

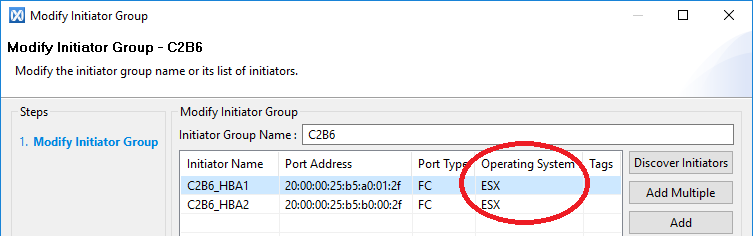

Note that this will ONLY change the behavior for initiators that have their OS type configured as ESX – if it’s set to the default of “Other” or any other value then there will be no change. This also means that it’s more critical than ever to make sure that the initiator OS type is set correctly.

You can find more details about the difference between APD and PDL at https://kb.vmware.com/kb/2004684

Native Multi-Pathing v's PowerPath

The options mentioned above are relevant for both VMware Native Multi-Pathing (NMP) as well as EMC PowerPath Virtual Edition (PP/VE).

However with PP/VE there's an additional benefit to using "APD" mode. On rare occasion PP/VE has been seen to offline a LUN when it should only offline a path. We're not sure why this happens, and it's been raised with the Powerpath team to investigate, but the good news is that this doesn't happen using "APD" mode, so making that change will remove any chances of ever hitting this problem.

Making The Change

At some stage in the not to distant future we'll change the default setting of this value for newly installed arrays. Until that happens, and for existing arrays, my recommendation is to change to "APD" mode in all situations except (possibly) one.

If you have VMware guests that access storage from XtremIO and from another array, AND those hosts could continue functioning (in at least a limited fashion) if the XtremIO storage was unavailable, then you'll likely be better with the PDL behavior as it will make it a little more likely that VMware and your guests will be able to survive the XtremIO LUNs disappearing. It does mean that you'll most likely need to reboot the ESX hosts when XtremIO recovers, but that's the price you'll pay for being able to keep your guests running during the outage.

In all other cases, APD is the preferred option - which is exactly why we intend to change the default behavior to that going forward.

What Actually Changed?

Behind the scenes, the change that was made is to the SCSI code the storage controllers send to the host when they are shutting down. As XtremIO's Storage Controllers are protected by battery backups units, even for something like a power outage the array is able to communicate with the host during such a shutdown (presuming of course that the host is still up, and hasn't disappeared itself due to the power outage!)

Depending on which mode is selected, the array sends a slightly different SCSI error code to any requests whilst it is in the process of shutting down. When these errors are only sent down some of the paths the LUN is available on (eg, when shutting down or rebooting a single storage controller) VMware takes exactly the same action - it marks that path as unavailable, and continues to access the LUN via other paths.

However if VMware receives these error codes via ALL paths to a LUN - such as would occur when shutting down the entire array - then VMware will act differently for the two error codes. For the SCSI error sent when in "pdl" mode, VMware will mark the device as being in PDL state. For the error sent in "apd" mode, VMware will mark the device as being in APD state.

To be clear, although we've called these states "apd" and "pdl" on the array side, it's technically not XtremIO that sets these states on VMware - we've just picked names for the options that correspond to what VMware does when we send the specific error codes to the host. VMware interprets those codes and makes the decision between PDL or APD.