Adding Volumes to XtremIO Consistency Groups

XtremIO 4.0 added the concepts of Consistency Groups and Snapshot Sets. These were added mainly to simplify the flow when creating new snapshots of multiple volumes, and more importantly when refreshing/restoring those snapshots.

Volumes are added to a Consistency Group, a snapshot of that CG is taken (and thus a snapshot is created for each of the volumes in it), and the resulting snapshots are grouped together in an automatically created Snapshot Set. Take another snapshot of that same CG, and you'll get a new Snapshot Set created for those new snapshots.

Refreshing the snapshot from the original volumes is simply a matter of selecting the Consistency Group and the Snapshot Set, and the array will handle refreshing each of the volumes/snapshots involved - there's no need to do anything at the individual volume level.

If a new volume is added to a Consistency Group, and then an existing Snapshot Set is refreshed, then we obviously have a conflict - the Consistency Group has (say) 5 volumes in it, whilst the Snapshot Set being refreshed only has 4. The good news is that this is handled automatically and transparently by the array - in addition to refreshing the existing snapshots, it will create new snapshots for any new volumes that have been added to the CG since the last refresh.

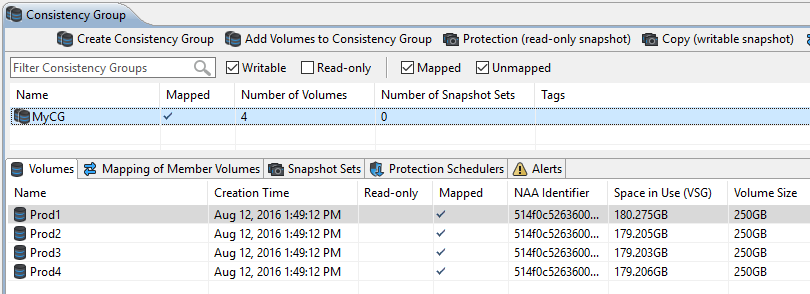
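To make the refresh behaviour concrete, here is a minimal sketch that models it in plain Python. It does not talk to an array. The function name `refresh_plan`, the dictionary layout, and the `.snap` snapshot names are all made up for illustration, and the volume names simply follow the Prod1 to Prod5 pattern used in the example below.

```python
def refresh_plan(cg_volumes, snapshot_set):
    """Model of a CG refresh (illustrative only, not array code).

    cg_volumes:   names of the volumes currently in the Consistency Group
    snapshot_set: dict mapping source volume name -> existing snapshot name
    Returns (volumes whose snapshots get refreshed,
             volumes that get a brand new snapshot).
    """
    refreshed = [v for v in cg_volumes if v in snapshot_set]
    created = [v for v in cg_volumes if v not in snapshot_set]
    return refreshed, created


# Hypothetical state: the CG has grown to 5 volumes, the Snapshot Set has 4.
cg = ["Prod1", "Prod2", "Prod3", "Prod4", "Prod5"]
snap_set = {
    "Prod1": "Prod1.snap",
    "Prod2": "Prod2.snap",
    "Prod3": "Prod3.snap",
    "Prod4": "Prod4.snap",
}

refreshed, created = refresh_plan(cg, snap_set)
print("refreshed:", refreshed)
print("newly created:", created)
```

Anything that ends up in the second list is a snapshot that did not exist before the refresh, which is why it matters later on.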

For example, I start with my CG with 4 volumes in it :

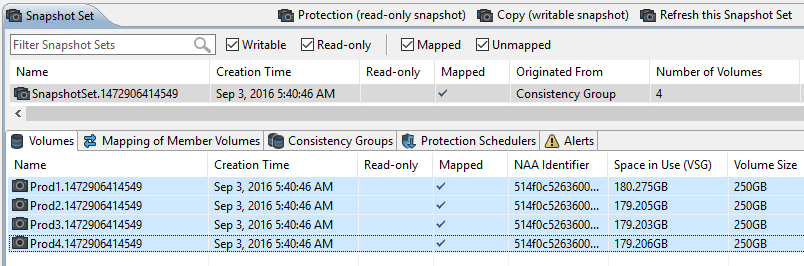

Creating a new snapshot of this CG results in a Snapshot Set containing my 4 new snapshots, as expected, which I then map to my host :

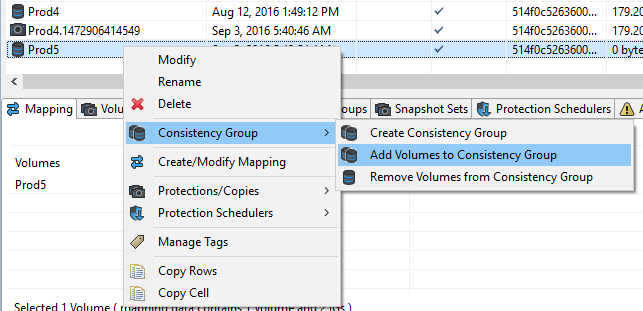

When at a later time I add a 5th volume to the database, I can easily add it to the CG :

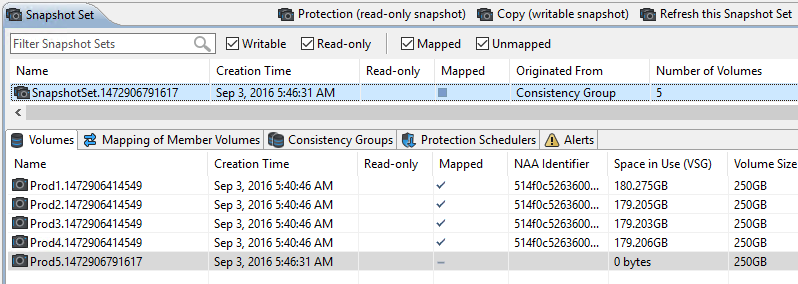

The next time I do a refresh, the new snapshot is automatically created for me and put in the Snapshot Set (note that the creation time on Prod5 is later than for the other volumes, as it was created during the refresh, not when the original snapshot was taken) :

But can you see the problem? The new volume isn't mapped. The system has no way of knowing if that volume should be mapped, or to where.

Catch-22

The problem is we've got a catch-22. We can't map the volume until we've refreshed the snapshot set. But if the refresh happens during a scripted process, then we don't get a chance to do the mapping before the host will try and access the extra disk and find it's not there.

Unfortunately there is no real solution to this problem at the moment. You might think you can just take a snapshot of the new volume and assign that to the host, but the issue is that there's no way to add that snapshot to the Snapshot Set, so when you do the refresh it will not refresh that volume, and will still go ahead and create a new snapshot in the Snapshot Set.

The Short-Term Solutions

If you're doing the refresh manually then the solution is simple - just include an extra step to map any new snapshots when you do the refresh.

The issue arises when you're trying to automate the refresh process. Today there are 3 ways that you can handle this situation :

- Do the refresh manually the first time after you add a new volume to the consistency group. Doing it manually allows you to handle the mapping, and then any future refreshes will complete without issue.

- Automate the mapping of the new volumes. This would involve checking to see if any new volumes were added when the refresh occurs (e.g., checking the volumes in the snapshot set before and after the refresh), and if any new volumes were added then mapping them to the same host as the other volumes. This would involve a moderate amount of scripting, but at the end of the day wouldn't be all that difficult.

- Use AppSync! AppSync will automatically handle detecting which volumes are in use and mapping them, so does not have this issue.
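The second option above could be sketched roughly as follows. This is only an outline of the before/after comparison, not a working XtremIO script: `list_snapshots`, `refresh` and `map_volume` are hypothetical callables that you would implement yourself against whatever interface you use to drive the array, and the host name and snapshot names are invented for the demo.

```python
from typing import Callable, Iterable, List


def find_new_snapshots(before: Iterable[str], after: Iterable[str]) -> List[str]:
    """Return the snapshots present after the refresh but not before it."""
    before_set = set(before)
    return sorted(name for name in set(after) if name not in before_set)


def refresh_and_map(
    list_snapshots: Callable[[], Iterable[str]],  # hypothetical: snapshots in the set
    refresh: Callable[[], None],                  # hypothetical: triggers the refresh
    map_volume: Callable[[str, str], None],       # hypothetical: maps snapshot to host
    host: str,
) -> List[str]:
    """Refresh, then map any snapshot that the refresh created."""
    before = list(list_snapshots())
    refresh()
    after = list(list_snapshots())
    new_snaps = find_new_snapshots(before, after)
    for snap in new_snaps:
        map_volume(snap, host)
    return new_snaps


# In-memory stand-ins for the array, purely to exercise the logic.
snaps = ["Prod1.snap", "Prod2.snap", "Prod3.snap", "Prod4.snap"]
mapped = []


def fake_list():
    return list(snaps)


def fake_refresh():
    # Simulates the array creating a snapshot for the newly added volume.
    snaps.append("Prod5.snap")


def fake_map(snapshot, host):
    mapped.append((snapshot, host))


new = refresh_and_map(fake_list, fake_refresh, fake_map, "dbhost01")
print("newly mapped:", new)
```

In a real script the mapping step would need to run straight after the refresh and before the host rescans or mounts its disks, otherwise the host still hits the missing-disk problem described earlier.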

The Long-Term Solution

We are planning some changes to the way Snapshot Sets and Consistency Groups work to avoid this problem in the future. Stay tuned for more details on those changes...