Parsing XtremIO CLI Output

I generally don't recommend people use the XtremIO CLI for programmatic access - it's just so much easier and better to use the REST API.

However, there are occasions where using the CLI may make sense. Access to the CLI can be automated via SSH, and sending commands is relatively trivial - right up until it's time to parse the output from those commands.

    $ ssh scott@xms 'add-volume vol-name="MyVol1" vol-size="1t"'
    Added Volume MyVol1 [29]

The normal output from CLI commands that return data is a series of columns, which at first might appear easy to parse. Taking one of the simpler commands as an example, we see something like this:

    xmcli (admin)> show-bricks
    Brick-Name Index Cluster-Name Index State
    X1         1     xtremio4     1     in_sys
    X2         2     xtremio4     1     in_sys

Five columns, each with a single entry, and each with what appears to be a fixed width. The problem is that none of those assumptions is necessarily true! Look at what happens if I rename my cluster to have a longer name containing a space:

    xmcli (admin)> show-bricks
    Brick-Name Index Cluster-Name      Index State
    X1         1     XtremIO Cluster 4 1     in_sys
    X2         2     XtremIO Cluster 4 1     in_sys

As the value in a field gets longer, the column grows to accommodate it. Although not shown here, there's an additional issue: fields with no value simply show as blank. Between that and the spaces within the values, we can't use field location to parse the entries. This rules out basically every traditional way of parsing such output!
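To make the problem concrete, here is a small sketch that feeds the second show-bricks output through awk's default whitespace splitting. The exact spacing in the sample is my assumption (reconstructed from the column layout), and the function names are just for illustration.

```shell
#!/bin/sh
# Sample rows based on the second show-bricks output above.
# The exact column spacing is an assumption.
sample='Brick-Name Index Cluster-Name      Index State
X1         1     XtremIO Cluster 4 1     in_sys
X2         2     XtremIO Cluster 4 1     in_sys'

# Naive approach: split on runs of whitespace and treat field 3 as Cluster-Name.
naive_cluster_name() {
    printf '%s\n' "$sample" | awk 'NR > 1 { print $3 }'
}

# Count the whitespace-separated fields on each line.
field_counts() {
    printf '%s\n' "$sample" | awk '{ print NF }'
}

naive_cluster_name   # prints only "XtremIO" for each row, not the full name
field_counts         # the header has 5 fields, but each data row has 7
```

The header and the data rows no longer agree on how many fields there are, so any script that relies on `$3`, `$4` and so on quietly returns the wrong values.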

Thankfully there is a pattern to the output, and thus a way to parse it. Although there may be spaces in the values themselves, the field headings do NOT have spaces within them, and there is always at least one space (and frequently more) between the headings. The number of spaces can change over time as the values change, but there will always be at least one, and the values in each column will always align with the first character of that column's heading.

Taking the second show-bricks output above and looking at its header line, we have:

    Brick-Name Index Cluster-Name      Index State
    ^          ^     ^                 ^     ^
    1          12    18                36    42

We can then use these positions to parse the data below the header into the relevant fields.

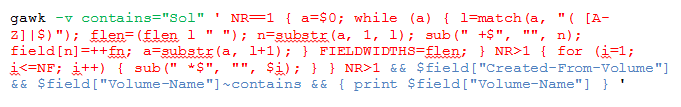
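As a rough sketch of that first step, the snippet below walks the header line and reports where each heading starts. It works in plain awk; the function name `header_offsets` and the exact header spacing are assumptions of mine for illustration.

```shell
#!/bin/sh
# Header line from the second show-bricks output (spacing assumed).
header='Brick-Name Index Cluster-Name      Index State'

# Print each heading together with the column it starts at.
header_offsets() {
    printf '%s\n' "$1" | awk '{
        pos = 1
        rest = $0
        while (rest != "") {
            # Position of the space just before the next heading,
            # or one past the end of the line for the last heading.
            l = match(rest, /( [A-Z]|$)/)
            name = substr(rest, 1, l)
            sub(/ +$/, "", name)          # drop the padding after the heading
            printf "%s starts at column %d\n", name, pos
            pos += l
            rest = substr(rest, l + 1)
        }
    }'
}

header_offsets "$header"
```

For the header above this reports columns 1, 12, 18, 36 and 42, matching the markers shown earlier. Note that it assumes every heading begins with an uppercase letter.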

A relatively easy way to do this is to use the block of "gawk" code below. Not easy because it's easy to understand - just easy because I've already written it for you!

The part of the command in red (including the "NR>1") should be left unchanged - this is the part of the code that splits the fields based on the header field lengths.

The part in blue is the actual action - in this case it's matching fields from the output - confirming that the field "Created-From-Volume" is non-zero, and that the field "Volume-Name" contains the substring from the variable "contains". It then prints the value of the "Volume-Name" field if it matches.

The green section at the start sets the variable "contains" to "Sol". In this case I could have just as easily hard-coded that value, but if you're passing in shell variables it's much easier doing it as it's shown here - set a gawk variable to the value you want, and then use that within the gawk script.
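The variable-passing pattern on its own looks something like the sketch below. The volume names and the `filter_names` function are made up for illustration; only the `-v` mechanism is the point.

```shell
#!/bin/sh
# Hypothetical value to search for; in a real script this might come from
# an argument or an earlier command.
contains="Sol"

# Print only the input lines that contain the given substring.
filter_names() {
    # -v copies the shell value into the awk variable "pattern", so the awk
    # program itself can stay inside single quotes.
    awk -v pattern="$1" 'index($0, pattern) { print }'
}

# Made-up volume names, one per line.
printf '%s\n' "Solaris-Vol1" "Linux-Vol2" "Solaris-Vol3" | filter_names "$contains"
```

Doing it this way avoids splicing shell variables into the middle of the awk program text, which gets fiddly with quoting. One caveat: awk interprets backslash escape sequences in values assigned with `-v`, so values containing backslashes need extra care.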

The above obviously isn't very easy to read, so here's a more readable version:

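    gawk -v contains="$backup" '
    # Header line: work out the width of each column and index each heading
    NR==1 {
        a=$0;
        while (a) {
            # Position of the space just before the next heading (or end of line)
            l=match(a, "( [A-Z]|$)");
            flen=(flen l " ");
            n=substr(a, 1, l);
            sub(" +$", "", n);
            field[n]=++fn;
            a=substr(a, l+1);
        }
        # Split all following lines using these fixed widths
        FIELDWIDTHS=flen;
    }
    # Data lines: strip the trailing padding from every field
    NR>1 {
        for (i=1; i<=NF; i++) {
            sub(" *$", "", $i);
        }
    }
    # Match lines where Created-From-Volume is set and Volume-Name matches
    # "contains" (note: plain variable, no "$"; it is treated as a regex)
    NR>1 && $field["Created-From-Volume"] && $field["Volume-Name"]~contains {
        print $field["Volume-Name"]
    } '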

Note that the above REQUIRES GNU Awk (gawk). It relies on the FIELDWIDTHS variable, which is a gawk extension and is not available in standard "awk".
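If gawk isn't available, the same header-driven idea can be approximated in plain awk by slicing each line with substr() instead of relying on FIELDWIDTHS. The following is only a sketch of that alternative technique, not a drop-in replacement for the script above; the function name `column_values` and the sample spacing are assumptions.

```shell
#!/bin/sh
# Second show-bricks output from earlier (exact spacing assumed).
sample='Brick-Name Index Cluster-Name      Index State
X1         1     XtremIO Cluster 4 1     in_sys
X2         2     XtremIO Cluster 4 1     in_sys'

# Print the values of one column, selected by heading name ($1).
column_values() {
    printf '%s\n' "$sample" | awk -v want="$1" '
    NR == 1 {
        # Record the start column and width of every heading.
        pos = 1; rest = $0
        while (rest != "") {
            l = match(rest, /( [A-Z]|$)/)
            name = substr(rest, 1, l)
            sub(/ +$/, "", name)
            # Duplicate headings (such as Index) resolve to the last one seen.
            start[name] = pos; width[name] = l
            pos += l
            rest = substr(rest, l + 1)
        }
        last = name    # the final column runs to the end of the line
        next
    }
    want in start {
        value = (want == last) ? substr($0, start[want]) \
                               : substr($0, start[want], width[want])
        sub(/ +$/, "", value)     # strip the column padding
        print value
    }'
}

column_values "Cluster-Name"
```

With the sample above this prints the full "XtremIO Cluster 4" value for each brick, spaces included. Like the gawk version, it has no error checking and assumes every heading starts with an uppercase letter.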

Obviously this is still fairly complex, and that's even without adding in any error checking - but if you must automate things via the CLI it at least makes it possible...