Friends Don't Let Friends Run Oracle on ZFS

I frequently get asked why I recommend against running Oracle DBMS on ZFS, and instead recommend using ASM. Filesystem vs. ASM (or filesystem vs. raw device) is a decision that people have had to make with databases for decades. Over that time, filesystem performance has improved to the point where there is generally only a small gap between "raw" device performance and filesystem performance - especially when using features like Direct IO and Concurrent IO, which allow the IO to bypass much of the overhead that filesystems add. Unfortunately ZFS is very much an exception to that rule.

To show the difference I set up 3 databases on Solaris X86 systems. The first was using Oracle ASM. The second, ZFS with a default ashift value of 9 (more on that in a moment). The third, ZFS with an ashift value of 13. In all cases the same number and size of LUNs were used - 4 LUNs for data, and a further 4 for redo, with all LUNs being presented from an XtremIO Dual Brick array. For both of the ZFS systems, Oracle's best practices for ZFS configuration were followed - 8KB block size and logbias of throughput for data, 1MB block size and logbias of latency for redo.

ASHIFT?!?

Unlike most filesystems, ZFS doesn't have a specific setting for the block size that it will use. Instead it uses a parameter called "ashift", which, when used as an exponent of two, gives the effective block size. By default, earlier versions of Solaris use an ashift value of 9, giving an effective block size of 2^9, or 512 bytes - matching the sector size that traditional hard disks present.
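As a quick illustration of that relationship, here is a minimal Python sketch that converts between an ashift value and the block size it implies. The function names are my own and purely illustrative; they are not part of any ZFS tooling.

```python
def ashift_to_block_size(ashift: int) -> int:
    """Return the effective block size in bytes for a given ashift (2^ashift)."""
    return 1 << ashift


def block_size_to_ashift(block_size: int) -> int:
    """Return the ashift matching a power-of-two block size given in bytes."""
    if block_size <= 0 or block_size & (block_size - 1):
        raise ValueError("block size must be a positive power of two")
    return block_size.bit_length() - 1


# The values discussed in this post: 512-byte, 4k and 8k blocks.
for ashift in (9, 12, 13):
    print(f"ashift={ashift} -> {ashift_to_block_size(ashift)} bytes")
```

So an ashift of 9 gives 512 bytes, 12 gives 4096 bytes, and 13 gives 8192 bytes.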

However most new hard disks, and all flash-based media, use a larger sector size - normally at least 4k for raw media, and commonly even larger for storage arrays. For example, XtremIO uses an internal 8k block size. A mismatch between the ashift value and the underlying storage block size will not appear to be a problem, as the media/array will handle it; however, there will be some level of performance impact - often a significant one - as a result.
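One way to picture the cost of a mismatch is to count how many array blocks a single IO lands on. The sketch below is a simplified model, not a description of XtremIO or ZFS internals: it assumes an 8k array block, an 8k IO, and that with an ashift of 9 the IO may start on any 512-byte boundary. The function name is hypothetical.

```python
def array_blocks_touched(offset: int, length: int, array_block: int = 8192) -> int:
    """Count the array blocks that an IO at byte `offset` of `length` bytes overlaps."""
    first = offset // array_block
    last = (offset + length - 1) // array_block
    return last - first + 1


# An 8k IO starting on an 8k boundary stays inside one array block.
aligned = array_blocks_touched(16384, 8192)

# The same IO shifted by one 512-byte sector straddles two array blocks.
shifted = array_blocks_touched(16384 + 512, 8192)

# Of the 16 possible 512-byte start positions within an 8k block,
# count how many cause an 8k IO to straddle two array blocks.
straddling = sum(array_blocks_touched(k * 512, 8192) == 2 for k in range(16))

print(aligned, shifted, straddling)
```

In this model only one of the sixteen possible 512-byte start positions keeps an 8k IO within a single array block, which is why the array ends up doing extra work when the alignment is only guaranteed to 512 bytes.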

Unfortunately, unlike the open-source versions of ZFS, the Solaris version of ZFS does not allow you to specify an ashift value when creating a zpool. Instead Solaris will use the "physical block size" of the first disk added to the pool to set the ashift value for the entire pool. The problem with this approach is that for legacy reasons most storage products do not present their real physical block size to the host, but instead default to a legacy 512 bytes. Whilst this might sound like a failing of the array, it's done for host compatibility reasons. For example, presenting a LUN with an 8K sector size to some versions of Solaris will crash the OS, whilst other OSes (e.g. VMware) will simply fail to see any disk that reports a sector size other than 512 bytes.

This means that by default, basically all zpools under Solaris will end up with an ashift value of 9. Newer versions of Solaris will sometimes do a better job of determining the correct value, but it's still far from reliable. Starting with Solaris 11.2 (or earlier versions with patches) it is possible to trick Solaris into using a different ashift value by manually overriding the "physical-block-size" setting for a disk via the ssd.conf file. For XtremIO, this setting is a part of the standard Host Configuration Guide settings for Solaris 11.2, and results in the OS using a physical block size setting of 8192 bytes, which causes an ashift value of 13 (2^13=8192) - leading to the ZFS block size perfectly aligning with the array block size.

Keep in mind that the ashift value is set by the first disk added to the zpool, and cannot be changed after the pool is created. This has two serious implications - the first being that any pools migrated from 512-byte based storage to 4k or larger based storage will retain the old ashift value - even if you change the physical-block-size setting. The second is that any pool created on systems upgraded from Solaris versions below 11.2 (where ashift cannot be controlled, even by setting the physical block size) to Solaris 11.2 will also be stuck on the old ashift value. The only way to change the ashift of the pool is to completely start again with a new pool, and then manually migrate the data between the old and new pools.
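Because the value is fixed at creation time, it's worth checking what an existing pool actually ended up with. The sketch below pulls ashift values out of pool configuration text. It assumes the text contains lines of the form "ashift: N", which is how zdb typically reports it (for example via "zdb -C" followed by the pool name, though the exact output varies between releases). The sample text and the pool name "oradata" are made up for illustration, and were not captured from the test systems.

```python
import re

# Illustrative text only, loosely in the style of zdb pool configuration
# output. The pool name and layout are hypothetical.
SAMPLE_CONFIG = """\
    name: 'oradata'
    vdev_tree:
        type: 'root'
        children[0]:
            type: 'disk'
            ashift: 9
        children[1]:
            type: 'disk'
            ashift: 9
"""


def ashift_values(config_text: str) -> list:
    """Return every ashift value found in the configuration text, in order."""
    pattern = r"^\s*ashift:\s*(\d+)\s*$"
    return [int(v) for v in re.findall(pattern, config_text, re.MULTILINE)]


def misaligned(config_text: str, wanted_ashift: int) -> bool:
    """True if any reported ashift differs from the value the storage needs."""
    return any(v != wanted_ashift for v in ashift_values(config_text))


print(ashift_values(SAMPLE_CONFIG))
print(misaligned(SAMPLE_CONFIG, 13))
```

For the sample above, every vdev reports an ashift of 9, so a check against the 13 needed for an 8k array block flags the pool as misaligned.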

Test Environment

Back to our 3 test environments. As mentioned above, I set up three Solaris 11.3 X86 systems, all running Oracle 12.1.0.2. The ASM system was also running Grid 12.1.0.2. With the exception of the storage differences, all systems and database instances were configured the same in every way.

SLOB was used to carry out the performance testing, with each database configured with a SLOB SCALE setting of "5G" (giving a tablespace which was around 750 GB per system). As SLOB does not run natively on Solaris, I also used a 4th host running Linux which was used to actually run SLOB, connecting to the Oracle instances on each of the 3 Solaris systems.

All tests were run for 5 minutes, with an UPDATE_PCT setting of 25, meaning that 25% of all operations were updates, whilst the remaining 75% were selects. 64 SLOB workers were used for all tests.

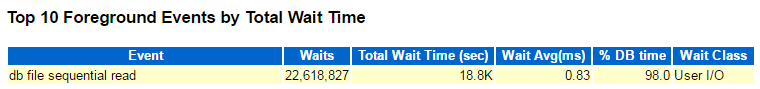

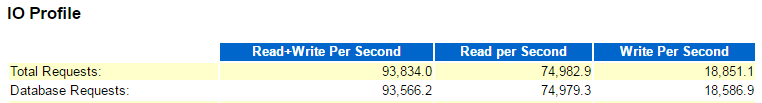

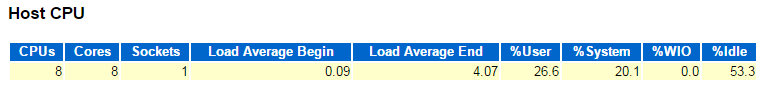

Results - ASM

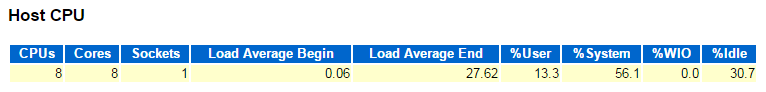

As expected, ASM gave excellent results. "db file sequential read" (a great indicator of array latency) was well below 1ms, and accounted for 98% of total DB time - exactly what you like to see for a SLOB run. Total sustained requests/second was just over 93,000, and the overall CPU utilization at the host level was just under 50% (with around 20% of that being system time - basically the time the OS spends handling things like IO).

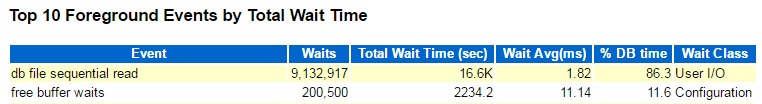

ZFS - Unaligned

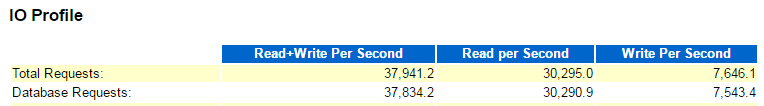

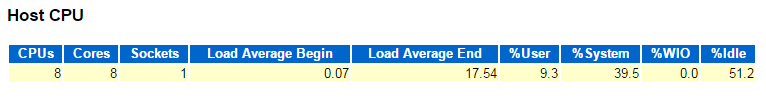

The results for ZFS with an ashift of 9 were far less impressive. "db file sequential read" times jumped up to 1.82ms - over double that of ASM. We also started to see more contention ("free buffer waits"), implying significantly lower write performance, despite Oracle being configured with a relatively high number of DB writer threads (12). Total requests/second dropped to around 38k (a 60% reduction from ASM), whilst the host CPU utilization was unchanged at around 50% (however this time with around 40% being attributed to system time).

ZFS - Aligned

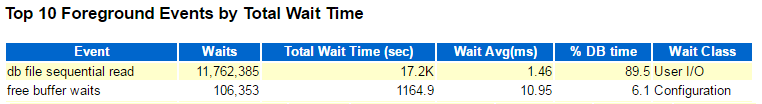

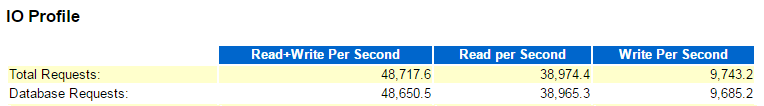

ZFS with an ashift of 13 (8k, matching the internal XtremIO block size) showed better results, but still not as good as ASM. "db file sequential read" dropped a little to 1.46ms (still around 75% higher than ASM). Total requests/second climbed up to 48K (around half of ASM), but this time host CPU jumped to around 70% (with 56% system).
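To put the three runs side by side, here is a small Python sketch that works out each ZFS run's throughput relative to ASM. It uses the rounded requests/second figures quoted in the text (93,000, 38k and 48K), so the results are approximate; the variable and function names are my own.

```python
# Rounded requests/second figures quoted in the text above.
ASM_RPS = 93_000
ZFS_RUNS = {
    "ZFS ashift=9": 38_000,
    "ZFS ashift=13": 48_000,
}


def pct_reduction(baseline: float, value: float) -> float:
    """Percentage drop of `value` relative to `baseline`."""
    return (1 - value / baseline) * 100


for name, rps in ZFS_RUNS.items():
    share = rps / ASM_RPS * 100
    drop = pct_reduction(ASM_RPS, rps)
    print(f"{name}: {share:.0f}% of ASM throughput ({drop:.0f}% reduction)")

# Latency change from fixing the alignment: 1.82ms down to 1.46ms.
latency_gain = pct_reduction(1.82, 1.46)
print(f"Aligned ZFS read latency is {latency_gain:.0f}% lower than unaligned")
```

On these figures the unaligned pool delivers roughly 41% of the ASM throughput and the aligned pool roughly 52%, while fixing the alignment trims read latency by about a fifth.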

From the Array Perspective

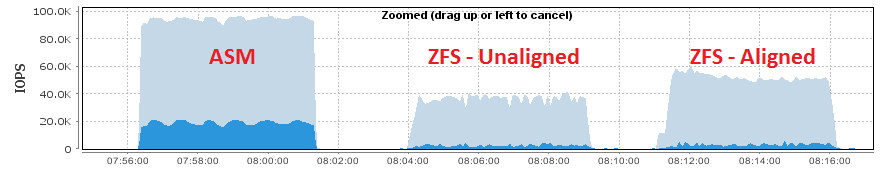

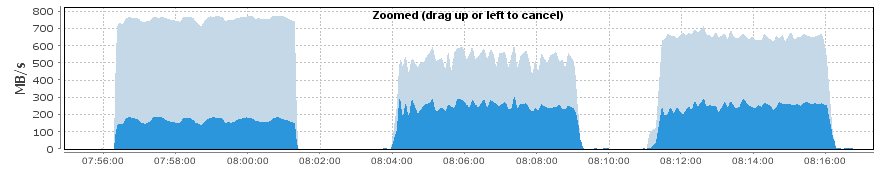

The IOPS seen from the XtremIO during this testing showed some further interesting details. IOPS were lower for the 2 ZFS runs, as expected; however, write IOPS in particular were far lower, implying that ZFS was doing more write coalescing, resulting in larger average IO sizes.

However in terms of bandwidth the opposite was true - despite generating fewer IOPS and fewer database requests/second, the bandwidth of writes was noticeably higher for ZFS than for ASM.
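The link between the two observations is that average IO size is simply bandwidth divided by IOPS, so fewer IOPS at higher bandwidth means larger IOs. The Python sketch below shows the arithmetic. The figures in it are hypothetical, chosen only to make the maths easy to follow; they are not read from the graphs above.

```python
KIB = 1024
MIB = 1024 * KIB


def average_io_size(bandwidth_bytes_per_s: float, iops: float) -> float:
    """Average IO size in bytes, given bandwidth (bytes/s) and IOPS."""
    if iops <= 0:
        raise ValueError("iops must be positive")
    return bandwidth_bytes_per_s / iops


# Hypothetical workload A: many small writes.
small_writes = average_io_size(80 * MIB, 10_240)

# Hypothetical workload B: fewer IOPS but more bandwidth, i.e. coalesced writes.
coalesced_writes = average_io_size(120 * MIB, 3_840)

print(f"A: {small_writes / KIB:.0f} KiB average IO")
print(f"B: {coalesced_writes / KIB:.0f} KiB average IO")
```

In this made-up example workload B issues well under half the IOPS of workload A, yet moves more data, because each IO is four times larger.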

Conclusion

There are a few different things to consider in these results. The first is obvious - Oracle performance drops significantly when using ZFS rather than ASM, and drops even further if your ZFS filesystem doesn't have the correct ashift value for the storage in use. Even with the correct alignment, ZFS performance was around half that of ASM.

More interesting, however, was the CPU increase when using ZFS. Despite only being able to sustain half as many IOPS, the ZFS system used almost 50% more CPU than ASM. Using additional CPU isn't a good thing for any environment, but when you're talking about an Oracle environment where licensing fees are generally charged per CPU, that 50% increase in CPU utilization will also mean a non-trivial increase in licensing costs (and remember, that's a 50% increase in CPU in order to carry out half the amount of work!).
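Combining the two effects gives a rough CPU cost per unit of work. The sketch below divides host CPU utilization by requests/second for the ASM and aligned ZFS runs, using the rounded figures quoted earlier (about 50% CPU at 93,000 requests/second for ASM, about 70% at 48K for ZFS). Treat the result as a ballpark, since the inputs are approximations; the names are my own.

```python
def cpu_per_request(cpu_pct: float, requests_per_s: float) -> float:
    """Host CPU percentage consumed per request/second of sustained work."""
    return cpu_pct / requests_per_s


# Rounded figures from the results sections above.
asm_cost = cpu_per_request(50, 93_000)
zfs_aligned_cost = cpu_per_request(70, 48_000)

# How many times more CPU each request costs on aligned ZFS than on ASM.
cost_ratio = zfs_aligned_cost / asm_cost

print(f"Aligned ZFS uses about {cost_ratio:.1f}x the CPU per request of ASM")
```

On these numbers each request on aligned ZFS costs around 2.7 times the CPU it does on ASM, which is the figure that matters when sizing (and licensing) cores for a given workload.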

There is simply no sensible reason for running Oracle on top of ZFS. The performance impact of doing so is significant, and more importantly the long-term cost of using ZFS, in terms of additional required resources and additional Oracle licensing fees, far outweighs any cost of migrating to ASM.