XtremIO X1 and VMware - APD vs PDL

In general, when a Storage Controller (SC) is shutting down for any reason, XtremIO X1 will send a "Logical Unit Not Supported" SCSI response code (0x5/0x25) in response to any requests received by that Storage Controller from a connected host. This could be a single SC shutdown (e.g., during an upgrade, where SCs are rebooted one at a time), or an entire array shutdown (e.g., where power has been lost and all Storage Controllers are running on battery).

For most operating systems, this gives the behavior that we want - the multipathing software on the host will mark that one path as down, and continue to use the other paths. For a complete array shutdown (e.g., a power outage), the host will end up marking all of the paths down, but will continue to try using them and will recover the paths once the array is available again.

However, VMware ESXi attempts to be a little more intelligent when it loses connectivity to a LUN in situations like this, and can put the device into one of two different states when it loses all paths to that device - "All Paths Down" (APD), or "Permanent Device Loss" (PDL). VMware have a KB article and a blog post that cover some of the differences between these two states, but simplistically the difference is that with APD you expect the LUN to return at a later stage, whilst with PDL the expectation is that it will not return (as the name implies!).

In general, the only way to recover from a PDL state is to reboot the VMware host, which can obviously be a fairly intrusive action. With APD, the devices will generally recover once at least one path to the device is recovered.
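The distinction above can be sketched as a small decision function. This is a deliberately simplified model of the host-side logic as described in this post, not ESXi's actual implementation; the function name and the response labels (`"ok"`, `"lun_not_supported"`, `"transport_disrupted"`) are hypothetical and exist only for illustration.

```python
from typing import Sequence


def device_state(path_responses: Sequence[str]) -> str:
    """Classify a device from the last response seen on each of its paths.

    Hypothetical labels: "ok" means the path is still usable,
    "lun_not_supported" stands for SCSI 0x5/0x25, and anything else
    (e.g. "transport_disrupted") is treated as a transient path failure.
    """
    if not path_responses:
        raise ValueError("a device needs at least one path")

    # At least one working path: the device stays online.
    if any(r == "ok" for r in path_responses):
        return "online"

    # Every path reported that the LUN is gone: assume it will not return.
    if all(r == "lun_not_supported" for r in path_responses):
        return "PDL"

    # All paths are down, but nothing says the LUN is permanently gone.
    return "APD"


print(device_state(["ok", "lun_not_supported"]))
print(device_state(["lun_not_supported", "lun_not_supported"]))
print(device_state(["transport_disrupted", "transport_disrupted"]))
```

In this model, only the second case (every path answering "Logical Unit Not Supported") ends in the state that typically needs a host reboot to clear.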

APD option in XtremIO

Given the potential impact and extended recovery from LUNs going into PDL state, in XtremIO XIOS 4.0.10 we added the ability to change the SCSI codes that the array sends to VMware hosts during a Storage Controller shutdown. Instead of sending a SCSI "Logical Unit Not Supported" response like we do for most OSes, we send a SCSI "Transport Disrupted" response. This has the same immediate impact on the VMware side (the path is taken offline), but if all paths fail, VMware will put the devices into the "All Paths Down" state rather than PDL.

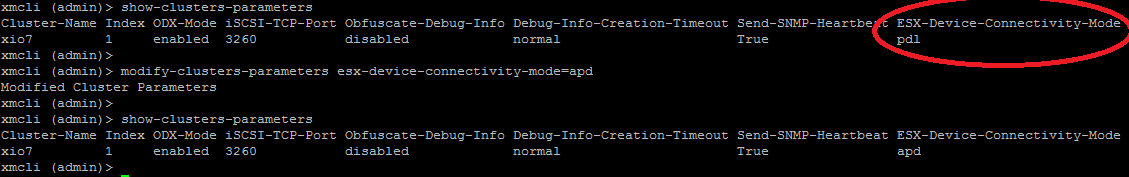

For backwards compatibility reasons we didn't change the default behavior, but instead added a new configuration setting to allow admins to select whether they wanted the old (PDL) or new (APD) behavior. The current setting can be viewed/changed using the "show-clusters-parameters" and "modify-clusters-parameters" commands from the XMS CLI:

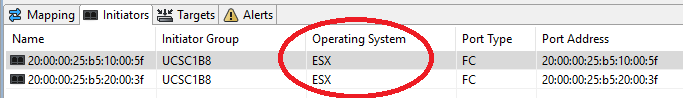

As these settings are for VMware ESXi only, they only apply to initiators that have been configured with an Operating System of "ESX". Initiators configured with any other OS, including the default of "Other", will still follow the old "PDL" behavior, regardless of what the cluster's ESX Device Connectivity Mode is set to.
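To make the interaction between the two settings concrete, here is a minimal sketch of the rule described above. It is only a model of the behavior as stated in this post, not array code; the enum, constant, and function names are made up for illustration.

```python
from enum import Enum


class ConnectivityMode(Enum):
    """Cluster-wide ESX Device Connectivity Mode (illustrative names)."""
    PDL = "pdl"
    APD = "apd"


LUN_NOT_SUPPORTED = "Logical Unit Not Supported (0x5/0x25)"
TRANSPORT_DISRUPTED = "Transport Disrupted"


def shutdown_response(initiator_os: str, cluster_mode: ConnectivityMode) -> str:
    """Response sent to an initiator while a Storage Controller shuts down.

    Only initiators whose Operating System is set to "ESX" are affected by
    the cluster mode; every other OS value keeps the original behavior.
    """
    if initiator_os == "ESX" and cluster_mode is ConnectivityMode.APD:
        return TRANSPORT_DISRUPTED
    return LUN_NOT_SUPPORTED


for os_name in ("ESX", "Other"):
    for mode in ConnectivityMode:
        print(f"{os_name:5} {mode.value}: {shutdown_response(os_name, mode)}")
```

The loop prints all four combinations; only the ESX initiator on a cluster set to "apd" gets the "Transport Disrupted" response.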

Default Behavior

As mentioned above, when we added this setting we did not change the default behavior. All existing and new systems kept the "PDL" behavior unless it was explicitly changed.

In XIOS version 4.0.25-22 (and later) we changed the default behavior, but only for newly installed clusters. Existing clusters still retain the PDL behavior unless they are changed, however any clusters installed with 4.0.25 or later will default to the APD behavior.
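As a rough illustration of the defaulting rule, the sketch below derives the expected default from the XIOS version a cluster was originally installed with. The helper names are hypothetical, and it assumes the build suffix after the dash can be ignored, so it compares only the 4.0.25 part. A cluster whose setting was changed by hand will not match this.

```python
def parse_xios_version(version: str) -> tuple:
    """Turn "4.0.25-22" into (4, 0, 25); the build suffix is ignored."""
    base = version.split("-", 1)[0]
    return tuple(int(part) for part in base.split("."))


def default_mode(installed_version: str) -> str:
    """Default connectivity mode for a cluster installed at this version.

    Upgrading an existing cluster does not change its setting, so pass the
    version the cluster was first installed with, not the running version.
    """
    if parse_xios_version(installed_version) >= (4, 0, 25):
        return "apd"
    return "pdl"


print(default_mode("4.0.10"))
print(default_mode("4.0.25-22"))
```

A cluster installed at 4.0.10 and later upgraded to 4.0.25 would therefore still be expected to report "pdl" until an admin changes it.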

VMware Multipathing Bugs

These changes were originally only really relevant for situations where the entire XtremIO cluster was shut down, such as a planned outage or a power outage. However, over the past few years VMware ESXi Native Multipathing has had two bugs that have made these changes relevant for other situations as well. The first of these was in ESXi 6.0u2, whilst the second was in specific patches across multiple versions of ESXi.

In both of these situations, ESXi acts incorrectly when it receives a "Logical Unit Not Supported" response, with the end result that it can incorrectly fail all paths to the storage, resulting in a host-side Data Unavailability situation - even though the array is fully functional. These issues can be triggered by many different arrays (generally Active/Active multipath arrays), including XtremIO X1.

Although these are bugs on the ESXi side, and they can be avoided by applying VMware patches (at the time of writing, the patches for the second issue are still pending), changing the XtremIO ESX Device Connectivity Mode to "apd" will also avoid triggering them, as we will no longer send a "Logical Unit Not Supported" response, which is the only SCSI response that triggers these issues.

Best Practice

In general, the best practice for this setting is to configure it to "apd".

If you're running one of the affected VMware ESXi versions and are planning an XtremIO upgrade, then there's basically no choice - without changing this setting the upgrade will result in ESXi incorrectly deciding that all paths to the storage are down, and result in a host-side data unavailability state.

If you're not running one of those versions, or not planning an upgrade, it's still generally recommended to change to "apd".

As this setting only affects what happens when a Storage Controller is being shut down, there's no impact on the operation of the array when making that change. If the "Operating System" setting for the initiators is not correctly set to "ESX" within XtremIO, you'll also need to change that - which can also be done on the fly without any impact (similar to the APD/PDL setting, this setting only has an impact when an SC is shutting down for some reason).

How about X2?

XtremIO X2 acts differently when shutting down a Storage Controller. For unplanned outages like power failure, the Storage Controller will immediately stop responding (rather than running on BBUs during the shutdown process like XtremIO X1 did). As a result, the host will simply see the path drop, and will act accordingly.

For planned Storage Controller shutdowns (including upgrades), the behavior is similar - the array will drop the port, which the host will detect and multipathing will offline the path.

In both cases, this results in an 'APD-style' behavior on ESXi, so whilst the mechanism is different, the end result is the same as the new behavior on XtremIO X1.